HAEC’s Consultation Process

HAEC conducted consultations with key stakeholders to unpack the barriers to conducting impact evaluations in humanitarian contexts.

About

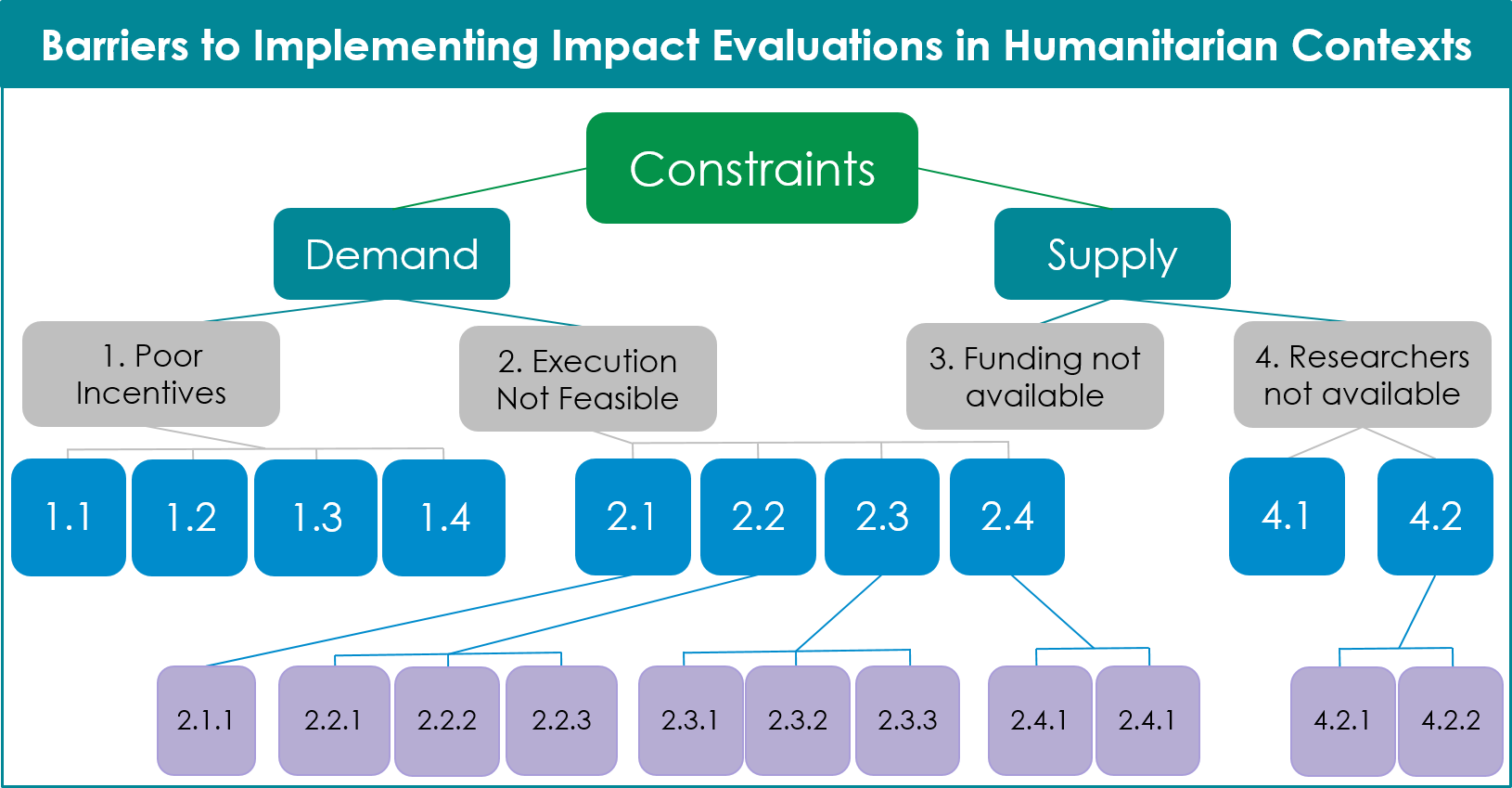

To inform HAEC's strategy for increasing the use of timely and cost-effective impact evaluations in humanitarian settings, the HAEC team conducted over 60 consultations with implementers, funders, and researchers to unpack the key barriers to conducting impact evaluations in humanitarian contexts. The team then applied a growth diagnostic approach to organize its findings into possible supply-side constraints (i.e., inputs required to conduct impact evaluations in humanitarian contexts) and demand-side constraints (i.e., factors that influence whether stakeholders perceive value from impact evaluations in humanitarian contexts).

Overview

Demand Side Constraints

- 1. Poor incentives

Donors and implementers alike currently perceive few benefits and many negative ramifications of investing in impact evaluations.

- 1.1 Not required by funders: Currently, a large majority of humanitarian assistance funding does not require that programming be subject to rigorous impact evaluation.

- 1.2 Humanitarian assistance culture holds that programming is important and often urgent: Stakeholders perceive the benefit of life-saving aid as very clear and therefore question the value of diverting resources to evaluations when more direct aid is needed.

- 1.3 Perceived funding or reputational risks: Implementers fear that poor evaluation results will lead to loss of funding or reputational consequences.

- 1.4 Lack of awareness of the spectrum of rigor: Stakeholders do not always understand the rigor of different evaluation tools and the research questions they can answer. Specifically, there is a misconception that a commonly used approach of a pre-post comparison can measure a program’s impact.
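The pre-post misconception in 1.4 can be illustrated with a small worked example. The sketch below uses made-up outcome averages (hypothetical numbers, not data from the consultations) and contrasts a pre-post change with a difference-in-differences estimate, which is one of several designs that use a comparison group. The function names are illustrative, and the difference-in-differences estimate is only credible if the two groups would have followed similar trends without the program.

```python
# Hypothetical illustration: why a pre-post comparison alone does not
# isolate a program's impact. All numbers below are invented.

def pre_post_change(before: float, after: float) -> float:
    """Change in the average outcome among program participants only."""
    return after - before


def difference_in_differences(
    treat_before: float,
    treat_after: float,
    comp_before: float,
    comp_after: float,
) -> float:
    """Participant change minus comparison-group change.

    Assumes both groups would have followed similar trends
    in the absence of the program (parallel trends).
    """
    return (treat_after - treat_before) - (comp_after - comp_before)


# Invented average outcome scores (e.g., a food security index).
program = {"before": 40.0, "after": 55.0}
comparison = {"before": 41.0, "after": 51.0}

naive = pre_post_change(program["before"], program["after"])
did = difference_in_differences(
    program["before"], program["after"],
    comparison["before"], comparison["after"],
)

print(f"Pre-post change among participants: {naive}")
print(f"Difference-in-differences estimate: {did}")
```

In this invented scenario, the comparison group also improved over the same period, so most of the pre-post change reflects what would have happened anyway (for example, a harvest or a lull in conflict) rather than the program itself.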

- 2. Execution not feasible

There are a variety of resource, logistical, and ethical constraints that inhibit the setup and implementation of impact evaluations in humanitarian contexts.

- 2.1 Bandwidth constraints

- 2.1.1 Low implementing partner (IP) bandwidth to coordinate: Implementers do not have additional bandwidth to support impact evaluations (e.g., coordinating with research teams or gathering needed data).

- 2.2 Design Challenges

- 2.2.1 Short timelines: Humanitarian assistance programming is typically quick to start up and has a short funding period, leaving limited time to prepare research and limited time to measure outcomes.

- 2.2.2 Security constraints: Humanitarian contexts are often more volatile and insecure, inhibiting access by research teams.

- 2.2.3 Population movement: Population movement is more common in humanitarian contexts, which are defined by conflict or environmental crises that often lead to displacement. This makes tracking respondents over time in a research study more challenging.

- 2.3 Ethical Concerns

- 2.3.1 Costs reallocated from beneficiaries: Stakeholders fear that money spent on research is less money spent on life-saving aid.

- 2.3.2 Programming withheld from vulnerable populations: Impact evaluations require a control or comparison group, which can involve withholding programming from beneficiaries.

- 2.3.3 Respondent burden from surveys: Humanitarian assistance contexts are typically highly saturated with organizations collecting monitoring data, and additional data collection from these vulnerable populations is unethical.

- 2.4 Effective and aligned partnerships not available

- 2.4.1 Conflict from misaligned priorities: Tension between the desire for public generation of knowledge (i.e., evidence meant to inform questions for a broad group of stakeholders such as testing new, innovative interventions or evaluating in new contexts) versus private generation of knowledge (i.e., evidence to inform specific questions for specific implementers) can lead to discord, discouraging future attempts at partnership. Additionally, focus on public generation of knowledge may supersede the generation of findings that are most relevant for implementers.

- 2.4.2 Lack of awareness of the diversity of research partners: Implementers may not always be aware of, or incentivized to work with, non-academic research partners.

Supply Side Constraints

- 3. Funding not available

There are limited options for securing funding for impact evaluations in humanitarian contexts.

- 4. Research staff not available

Few people have the skills needed to conduct impact evaluations in humanitarian contexts.

- 4.1 Low interest: Researchers may not be incentivized to conduct impact evaluations that are focused on implementer needs.

- 4.1.1 Low skills (low technical capacity of IPs): Implementing partners often do not have the technical skill sets in-house to design and execute impact evaluations.

- 4.1.2 Low contextual skills of external researchers: Researchers may lack the understanding of humanitarian contexts needed to adapt research methods appropriately.
